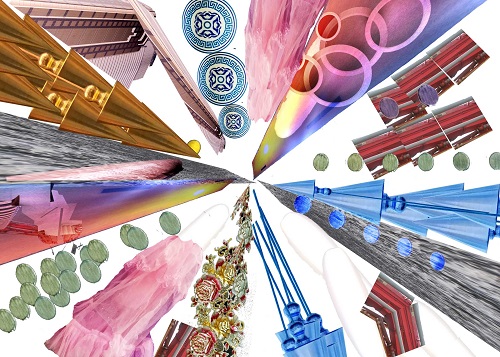

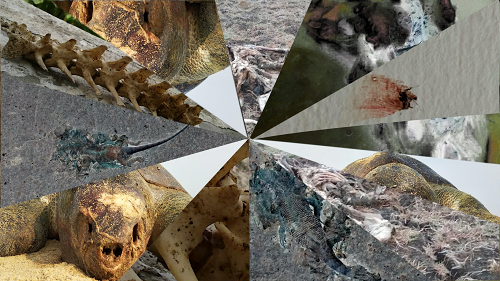

The illustrations usually attempt, quite literally, to 'decode' the infrastructure that makes a site what it is or connects many sites into one frame. They are, however, heavily crafted:

In this image we can see houses and apartments constructed over what was once the Hesaraghatta lake on the outskirts of Bengaluru. The upside-down platform is the reclaimed seaside in Beirut, which was recently cordoned off for development activities.

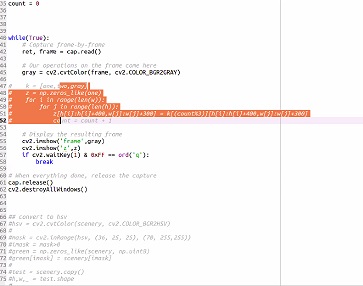

Can this process - of stacking and collapsing images that refer to a site - be automated?

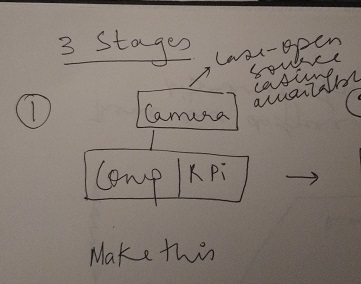

From what I have figured out so far from conversations with people who know a little bit about how these things work, there are three stages to this:

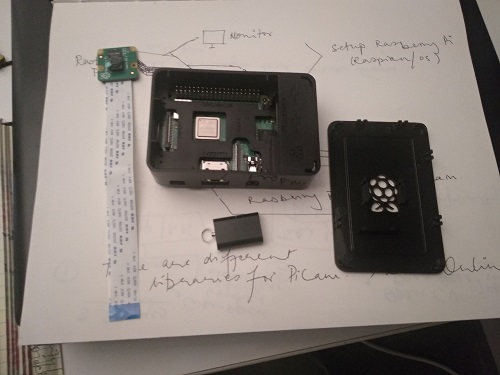

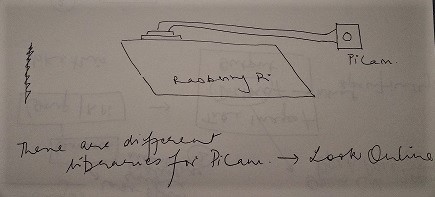

I would first have to connect a camera to a computer. A Raspberry Pi may be a better option.

Nancy, my friend in the second year, said I could use hers for the time being. I'm not sure if it is programmed.

There are different libraries for Pi cameras. I would have to look online to see which works best for this project.

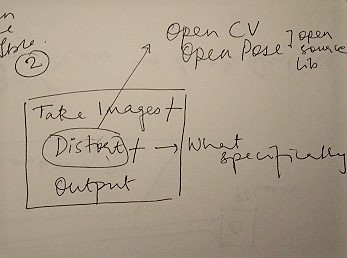

The second stage is where the complexity lies:

Here two processes take place:

two images in the background and one in the foreground

stacking a deck of playing cards

There are many other examples such as this. Oddly, few of these examples have the actual image attached to them.

The third stage is the output

THURSDAY OCT 3RD - conversation with Abhishek and Subhash

To have a better understanding of what my project entails, I had a conversation with two researchers at Camera Culture - Abhishek and Subhash.

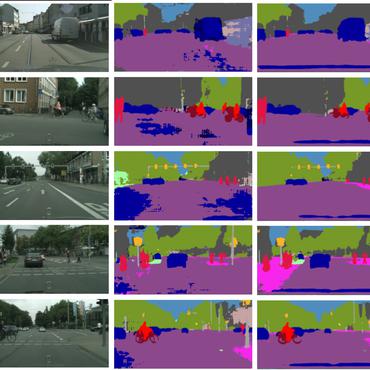

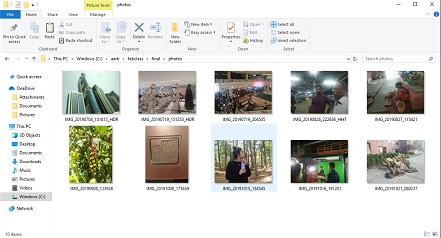

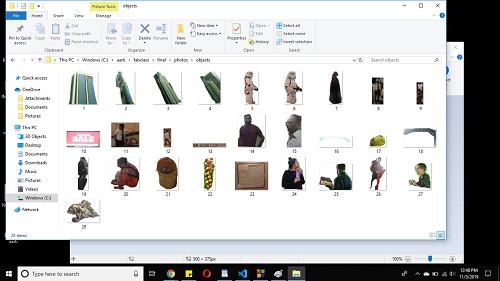

We looked at semantic segmentation, where 'objects' in an image are segregated via an automated process. This segmentation takes place at the level of the pixel, where objects such as cars, humans, trees, buildings etc. are separated from an image. Here is an explanation:

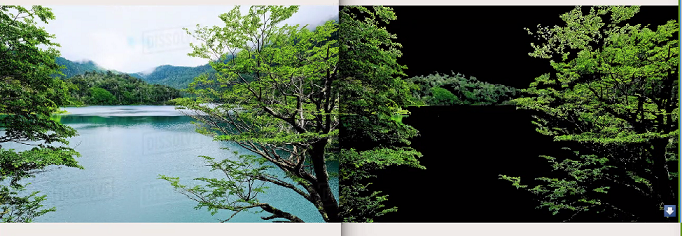
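To make the pixel-level idea concrete, here is a minimal sketch in Python. It assumes a segmentation model has already produced a label map, i.e. one class number per pixel. The class numbers, the names in `CLASS_NAMES`, the function `split_by_class` and the tiny 3x2 "image" are all made up for illustration. Plain lists are used to keep it self-contained; real images would normally go through an image library.

```python
# Hypothetical class ids; a real segmentation model defines its own label set.
CLASS_NAMES = {0: "background", 1: "tree", 2: "car"}


def split_by_class(image, labels):
    """Split an image into one layer per class.

    `image` and `labels` are same-sized 2D lists. In each returned layer,
    pixels that belong to another class are None (think: transparent).
    """
    class_ids = {cid for row in labels for cid in row}
    layers = {}
    for cid in sorted(class_ids):
        name = CLASS_NAMES.get(cid, f"class_{cid}")
        layers[name] = [
            [px if lb == cid else None for px, lb in zip(img_row, lb_row)]
            for img_row, lb_row in zip(image, labels)
        ]
    return layers


# Toy data: pixel values on the left, the class of each pixel on the right.
image = [
    [10, 11, 12],
    [13, 14, 15],
]
labels = [
    [0, 1, 1],
    [2, 2, 0],
]

layers = split_by_class(image, labels)
for name, layer in layers.items():
    print(name, layer)
```

Each layer is the same size as the original image, so a separated 'object' keeps its original position and can later be moved or merged with others.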

What I am probably more interested in is a way to automate randomised cropping. So, for example: (give image examples from my own work - also what random may mean) - separate objects from an image based on a few constraints

Once we have these as separated objects, select a few of them to merge into one image. Two things became very clear to me:
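One possible reading of "randomised cropping based on a few constraints" is sketched below in Python: pick a rectangle at a random position and size, constrained to stay inside the image and within a minimum and maximum size. The function names `random_crop_box` and `crop`, the size limits and the seed are assumptions for illustration, not a settled definition of what "random" should mean here.

```python
import random


def random_crop_box(img_w, img_h, min_size, max_size, rng):
    """Pick a random (left, top, width, height) box that fits inside the image."""
    max_w = min(max_size, img_w)
    max_h = min(max_size, img_h)
    if min_size > max_w or min_size > max_h:
        raise ValueError("min_size does not fit inside the image")
    w = rng.randint(min_size, max_w)
    h = rng.randint(min_size, max_h)
    left = rng.randint(0, img_w - w)
    top = rng.randint(0, img_h - h)
    return left, top, w, h


def crop(image, box):
    """Cut the box out of a 2D list of pixels."""
    left, top, w, h = box
    return [row[left:left + w] for row in image[top:top + h]]


rng = random.Random(3)  # fixed seed so a run can be repeated
# Toy 8x6 image where every pixel just stores its own (x, y) position.
image = [[(x, y) for x in range(8)] for y in range(6)]
box = random_crop_box(8, 6, min_size=2, max_size=4, rng=rng)
piece = crop(image, box)
print("box:", box, "rows:", len(piece), "cols:", len(piece[0]))
```

Further constraints (for example, "the crop must contain part of a building") could be added by rejecting boxes that fail a check and drawing again.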

NEXT STEPS:

Abhishek and Subhash asked me to answer two questions: (we stuck to looking at a still image rather than a moving image for the moment).

In an attempt to home in on what exactly I'm interested in, I had a conversation with my brother. We tried out a few possibilities for the kind of decisions I am interested in getting the programme to automate.

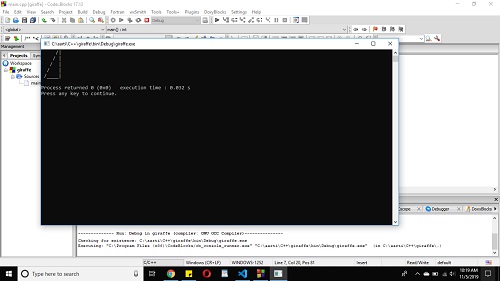

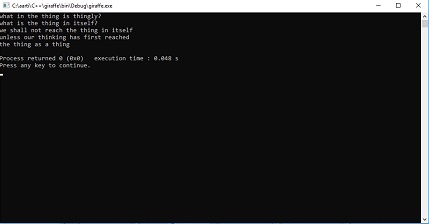

It didn't work the first two times

Finally it worked

I now feel like I understand the constraints within which I have to operate, and think I'm getting closer to finding out exactly what kind of image I want the computer to construct.

Next steps:

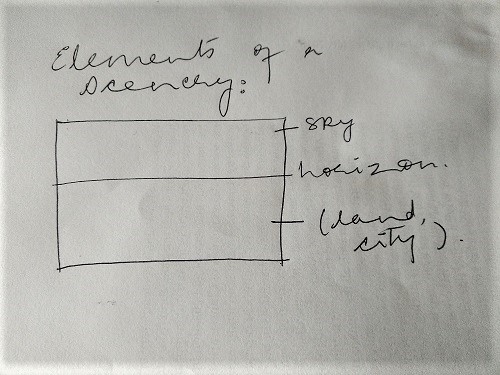

Extract objects from many images using semantic segmentation (for example: the tree, the house, the human, the car) and place them together according to the layout of a standard scenery
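The "place them together" half of this step can be sketched as pasting cut-outs onto a blank canvas at positions given by a layout. In the Python sketch below, the `LAYOUT` positions, the `paste` function and the letter-based cut-outs are all hypothetical stand-ins. `None` marks a transparent pixel, as it would around a segmented object.

```python
def paste(canvas, cutout, left, top):
    """Copy the non-transparent pixels of `cutout` onto `canvas` in place.

    Pixels that would land outside the canvas are skipped.
    """
    for dy, row in enumerate(cutout):
        for dx, px in enumerate(row):
            y, x = top + dy, left + dx
            if px is not None and 0 <= y < len(canvas) and 0 <= x < len(canvas[0]):
                canvas[y][x] = px


# Hypothetical layout of a "standard scenery": (left, top) for each object type.
LAYOUT = {"tree": (0, 0), "house": (2, 1)}

# Toy cut-outs; None is a transparent pixel.
cutouts = {
    "tree": [["T", None], ["T", "T"]],
    "house": [["H", "H"], [None, "H"]],
}

canvas = [["." for _ in range(5)] for _ in range(3)]
for name in ("tree", "house"):  # later objects are drawn over earlier ones
    left, top = LAYOUT[name]
    paste(canvas, cutouts[name], left, top)

for row in canvas:
    print("".join(row))
```

The order of pasting decides what ends up in the foreground, which is one of the decisions that would have to be made explicit for the computer.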

I decided to manually do what I wanted to automate and began by picking 10 images

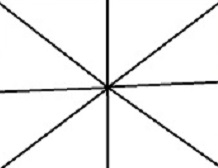

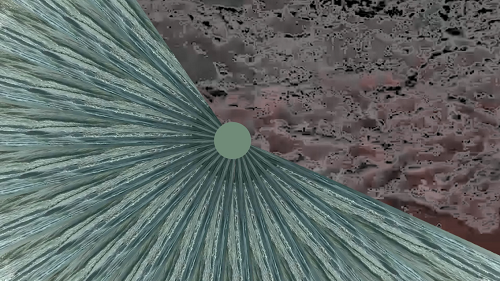

After which, instead of working with a standardised scenery, I decided to make my life simpler by only plotting a vanishing point at the center of the image
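One way a single central vanishing point could drive placement is sketched below in Python. It rests on an assumption the notes above do not spell out: an object that should look further away is moved toward the vanishing point and shrunk by the same factor `t`. The function names and the 800x600 canvas size are illustrative only.

```python
def toward_vanishing_point(point, vanishing_point, t):
    """Move a point toward the vanishing point.

    t = 1 leaves it where it is; smaller t pulls it closer to the vanishing point.
    """
    (x, y), (vx, vy) = point, vanishing_point
    return (vx + t * (x - vx), vy + t * (y - vy))


def place_receding(box, vanishing_point, t):
    """Shift and shrink a (left, top, width, height) box by the same factor t."""
    left, top, w, h = box
    new_left, new_top = toward_vanishing_point((left, top), vanishing_point, t)
    return (new_left, new_top, w * t, h * t)


width, height = 800, 600            # assumed canvas size
vp = (width / 2, height / 2)        # vanishing point at the center of the image
near = (100, 400, 200, 100)         # an object in the foreground
far = place_receding(near, vp, 0.5) # the same object, pushed back
print(far)
```

With `t = 0.5` the object sits halfway between its original spot and the center of the image, at half its original size.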

and tried another one:

---SCALING DOWN OF PROJECT---

After speaking with D, I realised that maybe my project was too ambitious given the limited time frame.

In the meantime, since image making is a part of my artistic practice, I have begun manually creating the collapsed images that I wanted to automate.

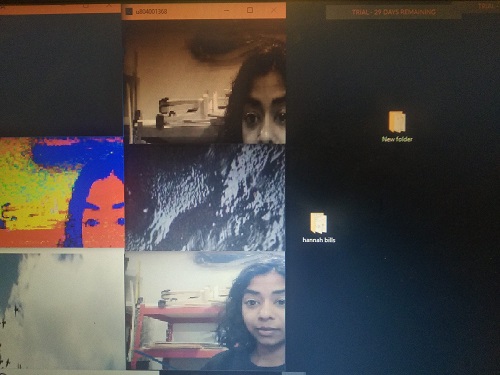

So instead I have decided to work with the ESP32-CAM, which has Wi-Fi. The revised idea is now to have a button embedded on a board connected to the ESP32 and have the button change filters every time it is pressed.
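The button logic itself is small. The Python sketch below only illustrates the idea; code on the ESP32 would be written for that board, and the filter names and the `FilterCycler` class are hypothetical. The one detail worth noting is that the filter should advance once per press, not continuously while the button is held down.

```python
FILTERS = ["none", "grayscale", "invert"]  # hypothetical filter names


class FilterCycler:
    """Step through a list of filters, one step per button press."""

    def __init__(self, filters):
        self.filters = list(filters)
        self.index = 0
        self.was_pressed = False

    def update(self, pressed):
        """Feed in the current button state and get back the active filter.

        The filter only advances at the moment the button goes from
        released to pressed, so holding the button does not skip filters.
        """
        if pressed and not self.was_pressed:
            self.index = (self.index + 1) % len(self.filters)
        self.was_pressed = pressed
        return self.filters[self.index]


cycler = FilterCycler(FILTERS)
# Simulated button readings over time: press and hold, release, press, press.
readings = [False, True, True, False, True, False, True]
history = [cycler.update(r) for r in readings]
print(history)
```

After the last filter the list wraps around to the first one again.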

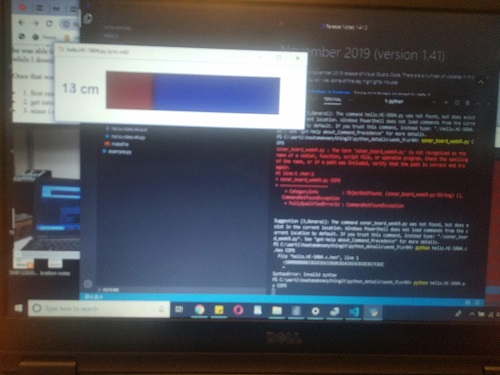

I used the networking and interfaces weeks to try to get my ESP32 camera working, but it failed every time.

Since I was running out of time, I decided to work with my sonar distance board and have it connect to the Wi-Fi. So with a change in distance, the image on the screen would change filters live.
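The mapping from distance to filter can be thought of as a set of distance bands. The Python sketch below is only an illustration of that idea: the band limits in centimetres, the filter names and the function `filter_for_distance` are assumptions, not values from the actual board.

```python
# Hypothetical bands: (upper limit in cm, filter name), from nearest to furthest.
BANDS = [
    (20, "invert"),
    (60, "blur"),
    (float("inf"), "none"),
]


def filter_for_distance(distance_cm, bands):
    """Return the filter of the first band whose upper limit is above the distance."""
    for upper_limit, name in bands:
        if distance_cm < upper_limit:
            return name
    return bands[-1][1]  # fallback if no band matched


# Simulated sonar readings as something moves away from the sensor.
for reading in [5, 19.9, 20, 45, 100]:
    print(reading, "->", filter_for_distance(reading, BANDS))
```

Sonar readings tend to jitter, so in practice the reading might need smoothing (for example, averaging the last few values) to stop the filter flickering at a band boundary.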

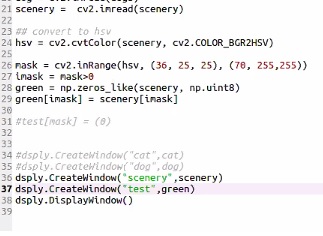

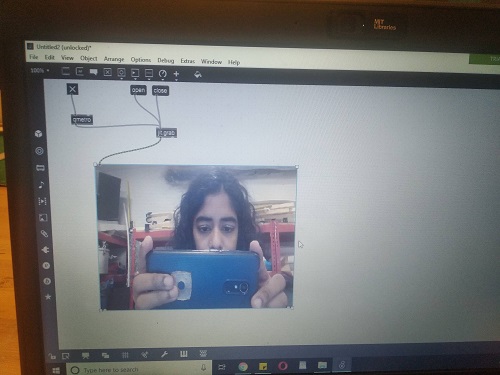

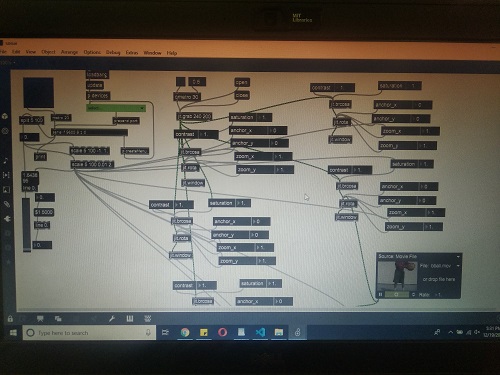

As the first step, I had to learn how to use Max 8, an interface software. Pohao is good at using this software, and was ready to help me learn it.

I first had to learn how to open a camera window in the Max interface to take video and photos using the webcam. That was not too hard.

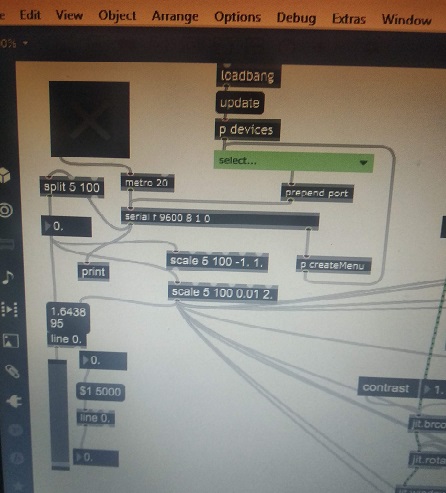

Then I connected my sonar board:

But it was hard to get Max to read the sonar board, since the programme generally reads only gaming devices, keyboards, mice etc. This was where I needed Pohao's help.

Pohao was able to get Max to read the sonar board:

After this, I had to think of the ways in which to manipulate the image:

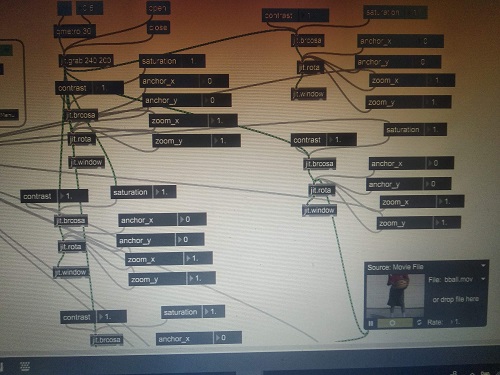

Here is the image of the whole workflow:

It worked very well, and Max is very easy to understand and learn while working.

So the sonar board will record the change in distance, and the webcam image will respond to it with the effect attached to that distance.

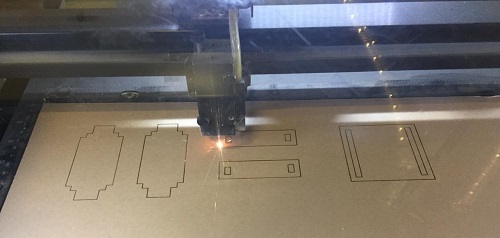

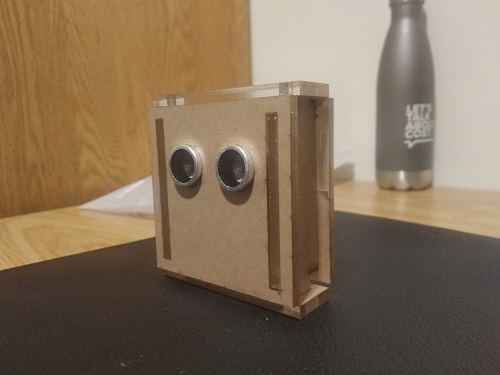

Then I decided to make a box for the sonar board.

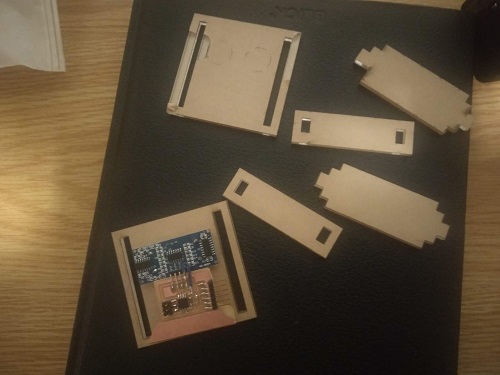

I worked in FreeCAD to create a simple parametric drawing, which I cut out of clear acrylic on the laser cutter.

However, while fixing the sonar board into the box, the whole thing broke because I put too much pressure on it! I tried fixing it with an additional wire, but it simply would not work. The device was being read by the system, but the sensor was not functional.